April 2010: We have two papers accepted for CVPR 2010.

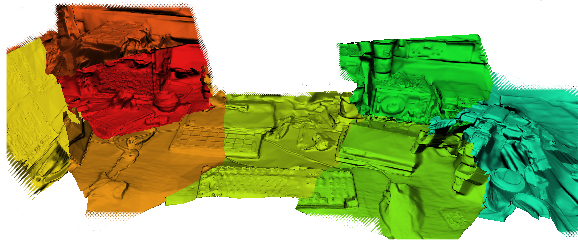

Richard Newcombe and I have developed a breakthrough method for live dense reconstruction. Our system, which requires just a single standard hand-held video camera attached to a powerful PC, is capable of automatic reconstruction of a desktop-scale scene within just a few seconds as the live camera browses the scene. Without markers or any other infrastructure, the camera’s pose is tracked and a 3D point reconstruction made in real-time using monocular SLAM (currently we use Klein and Murray’s PTAM). Automatically selected bundles of video frames with pose estimates are then used to generate highly detailed depth maps via dense matching and triangulation, and these are then joined into a globally registered dense mesh reconstruction of the scene’s surfaces. This reconstruction is of a level of detail and quality which has not previously been approached by any similar real-time system. The system works in standard everyday scenes and lighting conditions, and is able to reconstruct the details of objects of arbitrary geometry and topology and even those with remarkably low levels of surface texture. The main technical novelty is to use an approximate but smooth base mesh generated from point-based SLAM to enable ‘view-predictive optical flow’: this permits the FlowLib library from Graz University of Technology, running on the GPU, to obtain high precision dense correspondence over the baseline we need for detailed reconstruction. In the video linked below we demonstrate advanced augmented reality where augmentations interact physically with the 3D scene and are correctly clipped by occlusions. We plan to demonstrate the system live at CVPR, and further information is available on Richard’s page about the work.
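To make the "dense matching and triangulation" step concrete, here is a minimal sketch of how one pixel correspondence between two frames with known poses can be turned into a 3D point. It uses standard linear (DLT) triangulation with NumPy and is not taken from the paper; the intrinsics `K`, the two projection matrices and the test point are hypothetical values chosen only for illustration. A dense depth map would repeat this for every matched pixel.

```python
import numpy as np

def triangulate(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one 3D point from two views.

    P1, P2: 3x4 camera projection matrices; x1, x2: matched pixel positions.
    """
    # Each view contributes two linear constraints on the homogeneous point X.
    A = np.stack([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    # The solution is the right singular vector with the smallest singular value.
    _, _, Vt = np.linalg.svd(A)
    X = Vt[-1]
    return X[:3] / X[3]

def project(P, X):
    """Project a 3D point into pixel coordinates with projection matrix P."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

# Hypothetical setup: shared intrinsics and a 10 cm sideways baseline.
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-0.1], [0.0], [0.0]])])

X_true = np.array([0.05, -0.02, 0.6])  # a point 60 cm in front of the camera
X_est = triangulate(P1, P2, project(P1, X_true), project(P2, X_true))
```

With noise-free correspondences the estimate reproduces the original point; with real matches, the depth error grows as the baseline shrinks, which is why accurate correspondence over a wider baseline matters for detailed reconstruction.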

Live Dense Reconstruction with a Single Moving Camera (PDF format), Richard A. Newcombe and Andrew J. Davison, CVPR 2010.

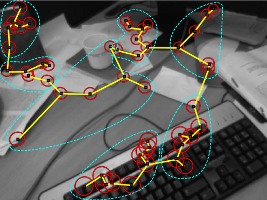

And Ankur Handa, Margarita Chli, Hauke Strasdat and I will present new work which extends our previous concept of Active Matching and presents algorithms that make it applicable as the matching engine in real-time systems which aim to robustly match hundreds of features per frame. In the Active Matching paradigm, feature matching (within SLAM or similar systems) is performed in a guided, one-by-one manner, where a joint prior on correspondence locations is used to guide incremental image processing and the search for matching consensus. Each feature match improves the predictions of all subsequent feature searches, and decisions are guided by information-theoretic measures rather than the random sampling and fixed thresholds of RANSAC or similar methods. The original AM algorithm (Chli and Davison, ECCV 2008, see below) maintained a joint covariance over the current estimated correspondence locations, and the necessary update of this representation after every feature match limited scalability to a few tens of features per frame in real-time. The new development in our Scalable Active Matching work is to use a general graph-theoretic model of the structure of correspondence priors, and then to use graph sparsification, either to a tree or to a tree of subsets, in order to dramatically speed up computation in new algorithms called CLAM and SubAM. The video illustrates the methods in operation, matching hundreds of features per frame in the context of our new keyframe SLAM system.
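The guided, one-by-one idea can be illustrated with a simplified sketch. It assumes a single joint Gaussian prior over the predicted image positions of all features and a fixed measurement noise `R`, picks the next feature by expected information gain, and conditions the whole prior on each match. It is the dense joint-covariance variant, whose full update after every match is the cost the paragraph describes; it is not CLAM or SubAM. The function names, the `search` callback and all numbers are hypothetical.

```python
import numpy as np

def info_gain(Sigma, idx, R):
    """Mutual information (in nats) between a noisy measurement of one
    feature's position and the joint state, for a Gaussian prior."""
    S = Sigma[np.ix_(idx, idx)] + R
    return 0.5 * (np.linalg.slogdet(S)[1] - np.linalg.slogdet(R)[1])

def condition(mean, Sigma, idx, z, R):
    """Update the joint prior after one feature is matched at position z."""
    S = Sigma[np.ix_(idx, idx)] + R
    K = Sigma[:, idx] @ np.linalg.inv(S)
    return mean + K @ (z - mean[idx]), Sigma - K @ Sigma[idx, :]

def active_match(mean, Sigma, search, R):
    """Match features one by one, most informative first.

    `search(i, predicted, cov)` is a hypothetical callback that searches the
    image near `predicted` and returns the matched 2D position of feature i.
    """
    remaining = set(range(len(mean) // 2))
    order = []
    while remaining:
        best = max(remaining,
                   key=lambda i: info_gain(Sigma, [2 * i, 2 * i + 1], R))
        idx = [2 * best, 2 * best + 1]
        z = search(best, mean[idx], Sigma[np.ix_(idx, idx)])
        mean, Sigma = condition(mean, Sigma, idx, z, R)  # sharpens all others
        remaining.remove(best)
        order.append(best)
    return mean, Sigma, order

# Toy example: three features sharing a common (camera-motion) uncertainty
# plus individual uncertainties of increasing size.
mean0 = np.array([100.0, 50.0, 200.0, 80.0, 300.0, 120.0])
Sigma0 = (np.kron(np.ones((3, 3)), 25.0 * np.eye(2))
          + np.kron(np.diag([1.0, 4.0, 9.0]), np.eye(2)))
R = 0.25 * np.eye(2)
truth = mean0 + np.tile([3.0, -2.0], 3)  # every feature shifted the same way

mean_post, Sigma_post, order = active_match(
    mean0, Sigma0, lambda i, predicted, cov: truth[2 * i:2 * i + 2], R)
```

In this toy case the most uncertain feature is searched first, and because the features are strongly correlated, its match already shrinks the search regions of the other two before they are examined.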

Scalable Active Matching (PDF format), Ankur Handa, Margarita Chli, Hauke Strasdat and Andrew J. Davison, CVPR 2010.

AVI
