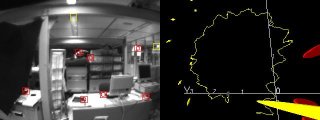

April 2005: New results in using MonoSLAM to perform real-time visual SLAM with the humanoid robot HRP-2. This work was done in collaboration with Olivier Stasse during my visit to the Joint Japanese-French Research Lab (JRL), AIST, Japan. Images were captured with a wide-angle FireWire camera fitted to the robot (the robot’s standard trinocular rig was not used because of its limited field of view), and all vision and SLAM processing ran on board, on the robot’s internal Linux PC. The real-time GUI and graphical output were sent over a wireless CORBA link to a separate workstation. The accompanying videos show external and SLAM views of a run in which the robot walked in a small circle around the lab, autonomously detecting and mapping natural point features and re-detecting early features to close the loop at the end of the trajectory (a substantial correction to the map is visible at this stage). The robot’s swaying motion as it walks is clearly visible in the trajectory recovered by SLAM. Loop-closing performance was improved by also integrating the output of the three-axis gyro in the robot’s chest, which reduced orientation uncertainty.
