Imperial PWP | dblp | Google Scholar | CV | Publications | linkedin | ResearchGate | Twitter | orcid.org/0000-0002-7813-5023

I am a Professor in the Department of Computing at Imperial College London. I head the human-in-the-loop computing group and I am one of four academics leading the Biomedical Image Analysis (BioMedIA) Collaboratory. Human-in-the-loop computing research aims to complement human intelligence with machine capabilities and machine intelligence with human flexibility.

I collaborate closely with the Division of Imaging Sciences and Biomedical Engineering at King’s College London, St Thomas’ Hospital London, and the Department of Bioengineering at Imperial. I am an Associate Editor for IEEE Transactions on Medical Imaging and Medical Image Analysis, a scientific adviser for ThinkSono Ltd, and a co-founder of Fraiya Ltd. I am stream lead for the EPSRC Centre for Doctoral Training in Smart Medical Imaging and am involved in the UKRI Centre for Doctoral Training in Artificial Intelligence for Healthcare.

My research is about intelligent algorithms in healthcare, especially Medical Imaging. I am working on self-driving medical image acquisition that can guide human operators in real-time during diagnostics. Artificial Intelligence is currently used as a blanket term to describe research in these areas.

Current research questions:

Can we democratize rare healthcare expertise through Machine Learning, providing guidance in real-time applications and second reader expertise in retrospective analysis?

Can we develop normative learning from large populations, integrating imaging, patient records and omics, leading to data analysis that mimics human decision making?

Can we provide human interpretability of machine decision making to support the ‘right to explanation’ in healthcare?

My teaching is focused on real-time computing, Machine Learning, Image Analysis, Computer Graphics and Visualisation.

For my research I am using Nvidia and Intel hardware (thank you for the donations!).

PhD Opportunities

I am currently only looking for outstanding PhD students who are interested in working on human-interpretable machine learning and efficient medical image processing for deep learning applications. My definition of ‘outstanding’: you did amazing things in the past that had real impact. If you are interested in applying for a PhD position in my group, you can apply through the Imperial College PhD online application system.

We have a number of PhD projects on offer within the EPSRC Centre for Doctoral Training in Medical Imaging and the UKRI Centre for Doctoral Training in AI for Healthcare. Interested? Find out ‘how to apply’ for the medical imaging CDT and ‘here’ for the UKRI AI4 Health centre.

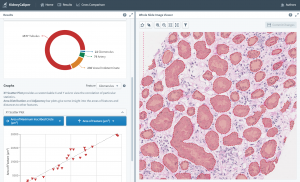

In digital pathology, demand is much higher than supply, so there is a real risk that disease is missed.

KidneyCaliper is a computational pathology project that applies deep learning image analysis to microscope slides of kidney biopsies. We believe that high-throughput, browser-based content analysis of digitised pathology slides will allow for early prediction of kidney transplant rejection. A prototype has been developed here: http://kidneycaliper.lucidifai.com/. For larger-scale clinical in-house deployment, however, we require local GPU acceleration that can be operated behind clinic firewalls (patient data protection!) to test clinical hypotheses about kidney transplant rejection on multi-modal patient data. Quantitative manual analysis of a single slide took over 5 hours; with our tools it can be done in less than 2 hours on Intel CPUs and in less than 60 seconds on Nvidia GPUs. Two A6000 GPUs would be required to allow 8 pathologists to work in parallel on multi-modal data (image + omics).
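As a rough back-of-the-envelope reading of the timings above, the short Python sketch below computes the implied speed-up per slide. Because the quoted figures are bounds ("over 5 hours", "less than 2 hours", "less than 60 seconds"), the ratios are lower bounds rather than measured results. The function and constant names are purely illustrative.

```python
# Back-of-the-envelope speed-up implied by the quoted per-slide timings.
# The figures are bounds, so each ratio is a lower bound on the real speed-up.

MANUAL_SECONDS = 5 * 60 * 60  # manual analysis: over 5 hours per slide
CPU_SECONDS = 2 * 60 * 60     # Intel CPUs: less than 2 hours per slide
GPU_SECONDS = 60              # Nvidia GPUs: less than 60 seconds per slide


def min_speedup(baseline_seconds: float, tool_seconds: float) -> float:
    """Lower bound on how many times faster the tool is than the baseline."""
    return baseline_seconds / tool_seconds


cpu_speedup = min_speedup(MANUAL_SECONDS, CPU_SECONDS)
gpu_speedup = min_speedup(MANUAL_SECONDS, GPU_SECONDS)

print(f"CPU: at least {cpu_speedup:.1f}x faster than manual analysis")
print(f"GPU: at least {gpu_speedup:.0f}x faster than manual analysis")
```

In other words, the CPU path is at least 2.5 times faster than manual analysis and the GPU path at least 300 times faster.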

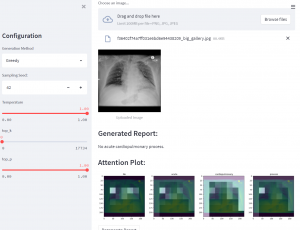

Chest radiographs are one of the most common diagnostic modalities in clinical routine. They can be acquired cheaply, require minimal equipment, and can be read by every radiologist. However, the number of chest radiographs obtained on a daily basis can easily overwhelm the available clinical capacity. We propose RATCHET: RAdiological Text Captioning for Human Examined Thoraces. RATCHET is a CNN-RNN-based medical transformer that is trained end-to-end. It extracts image features from chest radiographs and generates medically accurate text reports that fit seamlessly into clinical workflows. The model is evaluated for its natural language generation ability using common metrics from the NLP literature, and for its medical accuracy through a surrogate report classification task. The model is available for download at: http://www.github.com/farrell236/RATCHET.

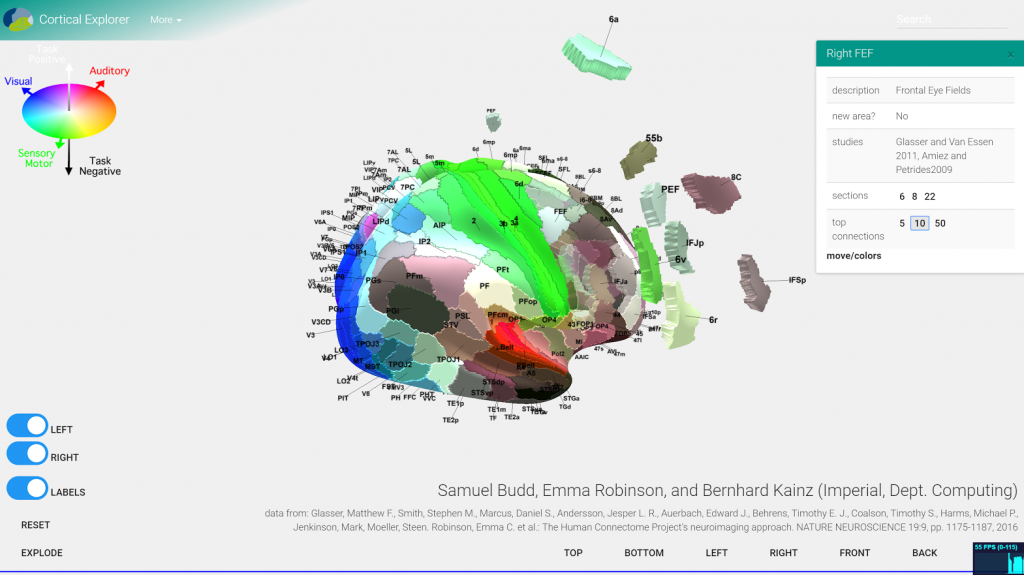

Ever wondered how the human brain works? Check out the Cortical Explorer by Sam Budd (Imperial MEng. final year project).

It is an early prototype of a service-oriented architecture for cortical parcellation evaluation with a multi-device, distributed front-end, i.e., a browser.

The various ways to navigate through our human brain map attracted a large crowd at the Imperial Intelligence redesigned Fringe on January 18th 2018. The Cortical Explorer currently also features on the touch screen at the front of the Data Science Institute.