The Future of Real-Time SLAM: Sensors, Processors, Representations, and Algorithms

News: we are in the process of uploading the talk slides here.

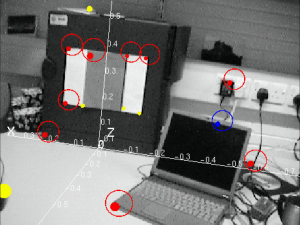

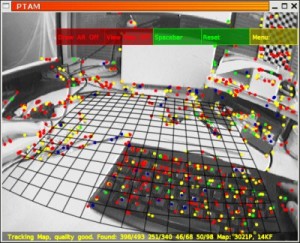

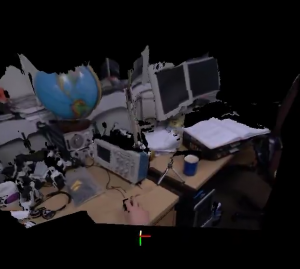

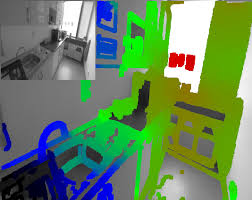

Real-time visual Simultaneous Localisation And Mapping (SLAM) has evolved rapidly in the past few years (see the figures for some history), and has increasingly drawn the interest of industry for mass-market applications such as consumer robotics, Augmented or Virtual Reality (AR/VR) and mobile devices. Meanwhile, we as researchers believe that this area is evolving towards a generic real-time 3D scene perception capability that will be a core component of a new spatial computing paradigm for consumer electronics, as well as a key to embodied AI and general-purpose robotics.

The availability of affordable new sensors and processing infrastructure has motivated paradigm shifts in SLAM algorithms and environment representations, and we can now estimate large-scale, fully dense maps and localise in them in real time. In this workshop, which leads on from the hugely successful workshop on Live Dense Reconstruction from Moving Cameras at ICCV 2011, we propose both to sketch the landscape of current state-of-the-art work in this area and to look to the future through a full programme of top-quality invited talks and a lively real-time demo session.

Given how far we have come, we should be bold in predicting and planning future developments as innovations in algorithms, representations, sensors and processors come together to permit new capabilities such as dynamic reconstruction, continuous-time tracking or ultra-high-quality modelling in real time. We will end the workshop with a panel discussion on questions such as: How will SLAM bridge the gaps between basic perception, estimation and artificial intelligence? What do we gain from dense maps, and what are the implications for real-time performance? What are the most promising new directions, and what elements in terms of representations, algorithms and hardware are missing?
