Multi-Object and Object-level Dynamic Mapping

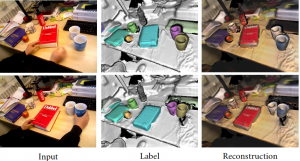

This work is still very much in progress: we are exploring how to segment and track (rigid) objects and map them into submaps.

Current collaborators:

- Binbin Xu

- Dr Ronnie Clark (Dyson Robotics Lab)

- Dr Michael Bloesch (Dyson Robotics Lab)

- Dr Sajad Saeedi (previously Robot Vision Group, now Dyson Robotics Lab)

- Prof. Andrew Davison

SemanticFusion

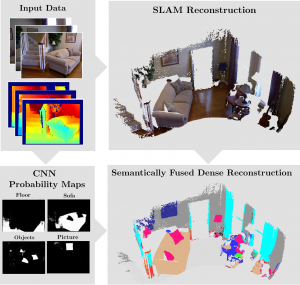

In SemanticFusion, we take ElasticFusion, a real-time capable dense RGB-D SLAM system, and add a semantic layer to it. In parallel with the localisation and mapping process, a CNN takes the same inputs (colour image and depth image) and outputs semantic segmentation predictions. We aggregate this semantic information in the map by means of Bayesian fusion. The work is significant for two reasons. First, such a real-time semantic mapping framework will play a core enabling role for future robots that perform more abstract reasoning, bridging the gap with AI, and that support intuitive user interaction. Second, we showed experimentally that using the map for semantic data association across many frames boosts the accuracy of 2D semantic segmentation compared to single-view predictions.
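The Bayesian fusion step can be illustrated with a minimal sketch. It assumes that each map element stores a discrete probability distribution over classes and that each new CNN prediction is folded in by element-wise multiplication followed by renormalisation. The function names and the three-class example are hypothetical and are not taken from the SemanticFusion code.

```python
def fuse_class_probabilities(prior, observation, eps=1e-12):
    """Recursive Bayesian update of one map element's class distribution.

    prior:       current per-class probabilities stored in the map element
    observation: per-class probabilities predicted by the CNN for the pixel
                 that projects onto this map element in the current frame
    """
    if len(prior) != len(observation):
        raise ValueError("prior and observation must cover the same classes")
    unnormalised = [p * o for p, o in zip(prior, observation)]
    total = sum(unnormalised)
    if total < eps:
        # Prior and observation contradict each other completely;
        # keep the prior rather than divide by (almost) zero.
        return list(prior)
    return [u / total for u in unnormalised]


def fuse_sequence(num_classes, observations):
    """Start from a uniform belief and fuse a sequence of predictions."""
    belief = [1.0 / num_classes] * num_classes
    for observation in observations:
        belief = fuse_class_probabilities(belief, observation)
    return belief


# Three noisy single-view predictions for the same map element
# (hypothetical values; class 0 is the most likely class in every view).
views = [[0.6, 0.3, 0.1], [0.5, 0.4, 0.1], [0.7, 0.2, 0.1]]
fused = fuse_sequence(3, views)
```

In this toy example the fused belief in class 0 is roughly 0.89, higher than in any single view (at most 0.7), which mirrors the intuition that associating predictions across many frames through the map can sharpen the semantic labels.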

In more recent work, we have been exploring more object-centric map representations.

Current collaborators:

- Dr Ronnie Clark (Dyson Robotics Lab)

- Dr Michael Bloesch (Dyson Robotics Lab)

- Prof. Andrew Davison

Former collaborators:

- John McCormac (Dyson Robotics Lab)

- Dr Ankur Handa (previously Dyson Robotics Lab)

Datasets

Deep learning approaches are data hungry. We are therefore working on a number of datasets in which the imagery is synthetically generated through realistic rendering. We can also use these datasets to evaluate SLAM algorithms (pose and structure), since ground truth trajectories and maps are available, along with complementary sensing modalities such as IMUs.

- SceneNet RGB-D

- InteriorNet (full download available soon)
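As an illustration of how ground truth trajectories support SLAM evaluation, the sketch below computes a root-mean-square position error between an estimated and a ground truth trajectory. It assumes that the two trajectories are already time-associated and expressed in the same coordinate frame; a full evaluation would typically align them first. The function name and sample values are hypothetical and not tied to any of the datasets listed above.

```python
import math


def ate_rmse(estimated, ground_truth):
    """Root-mean-square position error between two trajectories.

    Both arguments are sequences of (x, y, z) positions with one entry
    per timestamp, already associated and in a common frame (assumption).
    """
    if len(estimated) != len(ground_truth) or not estimated:
        raise ValueError("trajectories must be non-empty and of equal length")
    squared_errors = [
        sum((e - g) ** 2 for e, g in zip(p_est, p_gt))
        for p_est, p_gt in zip(estimated, ground_truth)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Hypothetical two-pose example: the second estimate is off by 1 m in z.
estimate = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
truth = [(0.0, 0.0, 0.0), (1.0, 0.0, 1.0)]
error = ate_rmse(estimate, truth)
```

A single scalar like this makes it easy to compare algorithms on the same sequence, although it hides where along the trajectory the error accumulates.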

Current collaborators:

- Dr Wenbin Li (previously at Smart Robotics Lab, now Lecturer at University of Bath)

- Dr Sajad Saeedi (previously Robot Vision Group, now Dyson Robotics Lab)

- Prof. Andrew Davison

Former collaborators:

- Dr Ankur Handa (previously Dyson Robotics Lab)

- John McCormac (Dyson Robotics Lab)

Learning for SLAM, SLAM for Learning, and Learning SLAM

Current research efforts tackle some of the challenges and opportunities in leveraging deep learning in conjunction with SLAM: we propose to use learned features for more robust tracking, we exploit learning for geometric priors, and we are exploring how to learn more efficient map representations for SLAM, or even whether SLAM could or should be learned altogether.

Current collaborators:

- Binbin Xu

- Jan Czarnowski (Dyson Robotics Lab)

- Andrea Nicastro (Dyson Robotics Lab)

- Tristan Laidlow (Dyson Robotics Lab)

- Shuaifeng Zhi (Dyson Robotics Lab)

- Prof. Andrew Davison

Former collaborators:

- John McCormac (Dyson Robotics Lab)

- Dr Ankur Handa (previously Dyson Robotics Lab)