Dense RGB-D Mapping

In recent work with Thomas Whelan, Renato Salas-Moreno, Ben Glocker and Andrew Davison, we perform RGB-D SLAM with both local and large-scale loop closures, where a dense surfel map is aligned and deformed in real time to continuously improve the consistency of the reconstruction.

As an extension, we also propose to use inertial measurements in the tracking step of ElasticFusion, where they can be combined in a probabilistically meaningful way with the photometric and geometric cost terms. Similarly, since acceleration measurements render the gravity direction globally observable, we can add (soft) constraints to the map deformations so that they remain consistent with gravity.
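One common way to make such a combination probabilistically meaningful is to weight every residual by its inverse measurement covariance, so that the total cost is a negative log-likelihood under independent Gaussian noise. The following is only a sketch under that assumption, not the formulation used in our work; the symbols (camera motion $\boldsymbol{\xi}$, residuals $r$, noise parameters $\sigma$ and $\boldsymbol{\Sigma}$, weight $\lambda$) are introduced here purely for illustration.

$$
E(\boldsymbol{\xi}) = \sum_{i} \frac{r_{\mathrm{rgb},i}(\boldsymbol{\xi})^{2}}{\sigma_{\mathrm{rgb}}^{2}} + \sum_{j} \frac{r_{\mathrm{icp},j}(\boldsymbol{\xi})^{2}}{\sigma_{\mathrm{icp}}^{2}} + \mathbf{r}_{\mathrm{imu}}(\boldsymbol{\xi})^{\top} \boldsymbol{\Sigma}_{\mathrm{imu}}^{-1} \, \mathbf{r}_{\mathrm{imu}}(\boldsymbol{\xi})
$$

A soft gravity constraint on the map deformation could, in the same spirit, be written as an extra penalty between the gravity direction implied by the deformed map, $\mathbf{g}_{\mathrm{map}}$, and the measured direction $\hat{\mathbf{g}}$:

$$
E_{g} = \lambda \, \lVert \mathbf{g}_{\mathrm{map}} - \hat{\mathbf{g}} \rVert^{2}
$$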

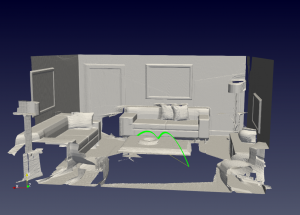

More recently, we have been exploring alternative map representations such as octrees, which lend themselves to efficient memory usage, fast access and spatial scalability, and which can be interfaced directly with robotic motion planning (see the green path in the picture for an example).
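To illustrate why octrees are memory-efficient and quick to query, here is a minimal sketch of a sparse occupancy octree in Python. It is not the data structure used in our systems: the class names, the `max_depth` parameter and the simple boolean occupancy flag are all assumptions made for this example.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Point = Tuple[float, float, float]


@dataclass
class OctreeNode:
    """One cubic cell; children are allocated lazily (hypothetical layout)."""
    center: Point
    half_size: float
    occupied: bool = False
    children: List[Optional["OctreeNode"]] = field(
        default_factory=lambda: [None] * 8
    )


class Octree:
    """Sparse occupancy octree: only cells that were observed are stored."""

    def __init__(self, center: Point, half_size: float, max_depth: int):
        self.root = OctreeNode(center, half_size)
        self.max_depth = max_depth
        self.node_count = 1  # counts allocated nodes, including the root

    def _contains(self, p: Point) -> bool:
        c, h = self.root.center, self.root.half_size
        return all(c[i] - h <= p[i] < c[i] + h for i in range(3))

    @staticmethod
    def _child_index(node: OctreeNode, p: Point) -> int:
        # One bit per axis: set if the point lies in the upper half.
        return sum(1 << i for i in range(3) if p[i] >= node.center[i])

    def insert(self, p: Point) -> bool:
        """Mark the leaf containing p as occupied; False if p is out of bounds."""
        if not self._contains(p):
            return False
        node = self.root
        for _ in range(self.max_depth):
            idx = self._child_index(node, p)
            if node.children[idx] is None:
                q = node.half_size / 2
                center = tuple(
                    node.center[i] + (q if (idx >> i) & 1 else -q)
                    for i in range(3)
                )
                node.children[idx] = OctreeNode(center, q)
                self.node_count += 1
            node = node.children[idx]
        node.occupied = True
        return True

    def is_occupied(self, p: Point) -> bool:
        """Descend at most max_depth levels; unallocated space counts as free."""
        if not self._contains(p):
            return False
        node = self.root
        for _ in range(self.max_depth):
            node = node.children[self._child_index(node, p)]
            if node is None:
                return False
        return node.occupied
```

A planner could call `is_occupied` for collision checks along a candidate path. Because children are only allocated where points were inserted, memory grows with the observed surface rather than with the volume of the bounding cube.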

Current collaborators:

- Emanuele Vespa (Performance Optimisation Group)

- Marius Grimm

- Nikolay Nikolov

- Prof. Paul Kelly (Performance Optimisation Group)

- Tristan Laidlow (Dyson Robotics Lab)

- Prof. Andrew Davison

Former collaborators:

- Dr Thomas Whelan (Dyson Robotics Lab, now Oculus Research)

Multi Level-of-Detail Reconstruction from Monocular SLAM

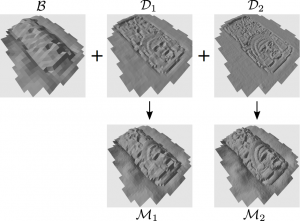

In dense mapping, we would like to reconstruct fine details when the camera is close to structure, but retain the ability to map more coarsely when it is far away. We are thus working on efficient, probabilistic methods to maintain multiple levels of detail, e.g. in a height-map reconstruction: a sparse monocular SLAM algorithm tracks the camera, and multi-view depth-map reconstructions are then fused into the map.
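As a rough illustration of the idea, the sketch below fuses height measurements per cell with an inverse-variance (Gaussian) update and picks the grid level from the camera distance. It is an assumption-laden toy, not our method: `HeightCell`, `select_level`, `MultiLevelHeightMap` and the pixel-footprint heuristic (`distance / focal_px`) are all hypothetical names and choices.

```python
import math
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass
class HeightCell:
    """Gaussian height estimate for one cell (infinite variance = unobserved)."""
    mean: float = 0.0
    variance: float = math.inf

    def fuse(self, z: float, var: float) -> None:
        """Inverse-variance weighted update with a new height measurement."""
        if math.isinf(self.variance):
            self.mean, self.variance = z, var
            return
        gain = self.variance / (self.variance + var)
        self.mean += gain * (z - self.mean)
        self.variance *= 1.0 - gain


def select_level(distance: float, focal_px: float,
                 base_cell_size: float, num_levels: int) -> int:
    """Pick the finest level whose cell size covers one pixel's footprint.

    Assumed heuristic: a pixel at the given distance covers roughly
    distance / focal_px metres; each level doubles the cell size.
    """
    footprint = distance / focal_px
    level, size = 0, base_cell_size
    while size < footprint and level < num_levels - 1:
        size *= 2.0
        level += 1
    return level


class MultiLevelHeightMap:
    """Sparse set of height grids, one per level of detail."""

    def __init__(self, base_cell_size: float, num_levels: int):
        self.base_cell_size = base_cell_size
        self.num_levels = num_levels
        self.cells: Dict[Tuple[int, int, int], HeightCell] = {}

    def integrate(self, x: float, y: float, z: float, variance: float,
                  distance: float, focal_px: float) -> Tuple[int, int, int]:
        """Fuse one height sample into the level matching its distance."""
        level = select_level(distance, focal_px,
                             self.base_cell_size, self.num_levels)
        size = self.base_cell_size * (2 ** level)
        key = (level, math.floor(x / size), math.floor(y / size))
        self.cells.setdefault(key, HeightCell()).fuse(z, variance)
        return key
```

Close-range samples land in fine cells, while distant samples update coarser cells. Repeated observations of the same cell reduce its variance, which is what makes the fusion probabilistic rather than a plain running average.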

Current collaborators:

- Zoe Landgraf (Dyson Robotics Lab)

- Prof. Andrew Davison

Former collaborators:

- Dr Jacek Zienkiewicz (now SLAMcore)

- Dr Akis Tziotsios (Dyson Robotics Lab)