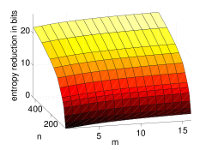

February 2010: A new paper in collaboration with Hauke Strasdat and J. M. M. Montiel has been accepted for publication at ICRA 2010 and will be presented in one of the prestigious “50 Years of Robotics” special sessions. We think this is important work: it rigorously analyses the relative merits of real-time monocular SLAM methods based either on filtering (like MonoSLAM or similar systems) or on keyframes and repeated optimisation (like Klein and Murray’s PTAM). Which approach provides the best local building block for a monocular SLAM map? Our analysis measures accuracy in motion estimation relative to computational cost. It turns out that, at current levels of computing power, there is a clear winner: optimisation is preferable. This is because real accuracy in motion estimation comes from using a large number of feature correspondences, which is computationally much more feasible in the optimisation approach. Filtering, on the other hand, is better at incorporating information from a large number of camera poses, but this has relatively little effect on accuracy. So you can expect to see much more work based on optimisation coming from my group in the future, though we still think that filtering or hybrid approaches may have an important role to play in high-uncertainty situations, such as when bootstrapping camera tracking.
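
To give a feel for why many correspondences are cheaper to exploit with optimisation, here is a toy cost model in Python. It is an illustrative assumption, not the benchmark used in the paper: a filter that keeps a dense joint covariance over n map points is charged roughly n^3 per frame, while keyframe bundle adjustment that eliminates the points via a Schur complement is charged roughly k^3 + k·n for k keyframes. The function names (`filter_cost`, `ba_cost`, `max_points`) are hypothetical, and all constant factors are ignored, so only the shape of the scaling is meaningful.

```python
# Toy asymptotic cost model (an assumption for illustration only).
# Constant factors are ignored; only the scaling with map size matters here.

def filter_cost(n_points: int) -> float:
    """Filtering with a dense covariance over n points: ~ n^3 per frame."""
    return float(n_points) ** 3


def ba_cost(n_points: int, n_keyframes: int) -> float:
    """Keyframe BA with Schur complement: ~ k^3 (reduced camera system) + k*n."""
    return float(n_keyframes) ** 3 + float(n_keyframes) * n_points


def max_points(cost_fn, budget: float) -> int:
    """Largest n with cost_fn(n) <= budget.

    Assumes cost_fn is nondecreasing in n and cost_fn(0) <= budget.
    """
    lo, hi = 0, 1
    while cost_fn(hi) <= budget:  # grow an upper bound by doubling
        hi *= 2
    while hi - lo > 1:  # binary search: cost(lo) <= budget < cost(hi)
        mid = (lo + hi) // 2
        if cost_fn(mid) <= budget:
            lo = mid
        else:
            hi = mid
    return lo


if __name__ == "__main__":
    budget = filter_cost(100)  # fix the budget to what a 100-point filter costs
    print(max_points(filter_cost, budget))                   # 100
    print(max_points(lambda n: ba_cost(n, 10), budget))      # 99900
```

Under this crude model, the same budget that lets a filter track 100 points lets 10-keyframe BA handle far more points, because its cost grows linearly rather than cubically in the number of features. The model says nothing about accuracy, which is what the paper actually measures against cost.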

Real-Time Monocular SLAM: Why Filter? (PDF format)

Hauke Strasdat, J. M. M. Montiel and Andrew J. Davison, ICRA 2010 (Winner, Best Vision Paper).