PI: Azalea Raad, Co-I: John Wickerson, Imperial College London

- Non-volatile memory (NVM) is fast becoming mainstream.

- Therefore, we need to be able to write programs that exploit it, correctly and efficiently.

- In order to verify that these programs are correct, we need formal models of how CPUs interact with NVM, able to answer questions like “is my data guaranteed to become persistent in the same order as it is written to memory?”

- This project aimed at designed and implementing techniques for empirically validating these models against mainstream architectures such as Armv8 and Intel x86.

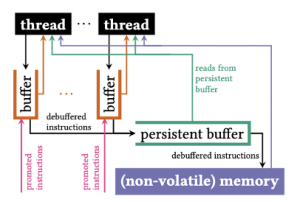

The emergence of non-volatile memory (NVM) is expected to revolutionise how software is written. NVM provides storage persistence across power failures, yet offers performance close to that of traditional (volatile) memory. As such, programs that require data persistence (e.g. databases) can achieve an orders-of-magnitude lower latency by storing their data on NVM rather than on standard disks. It is widely believed that NVM will supplant volatile memory entirely, allowing efficient access to persistent data.

However, writing correct persistent programs is a difficult task. A key challenge for the programmer is correctly accounting for the “persistency semantics” of the hardware, which describes the order in which writes performed by the CPU become persistent. Volatile caches between the CPU and the NVM can make persistency semantics quite counterintuitive, and motivates the need for formal verification.

Prior work has developed models of how persistency works in mainstream hardware such as Arm and Intel-x86. These models have been developed by studying specifications and interviewing designers, but have not yet been validated empirically on real-world hardware.

This project aimed at addressing such shortcomings. Validating persistency models on hardware is challenging because in order to test whether data is truly persistent we need to power-cycle the test machine, yet doing so for every test-case would led to infeasibly low testing throughput.

To get around this difficulty, we obtained a specialised hardware device called a “DDRDetective”, which was able to monitor the data traffic to and from the persistent memory while our test-cases are and can be used to to validate the persistency models for the Armv8 and Intel-x86 architectures.

PUBLICATION. “Foundations of Persistent Programming”, Dagstuhl Reports, Vol. 11, Issue 10, pp. 94–110, 2022

RELATED GRANTS. This project is also being partially funded by the EPSRC Programme Grant “IRIS: Interface Reasoning for Interacting Systems” (£6.1M, 2018–2023).

IMPACT. “The correctness of programs that exploit non-volatile memory is an important challenge to Arm. The formal semantics for how persistent memory works will be key in addressing this challenge. Practical methods to run litmus test for persistent memory empirically on real chips would help validate microarchitectural implementations of Arm persistency models.” – Andrea Kells, Director, Research Ecosystem, Arm Ltd. –