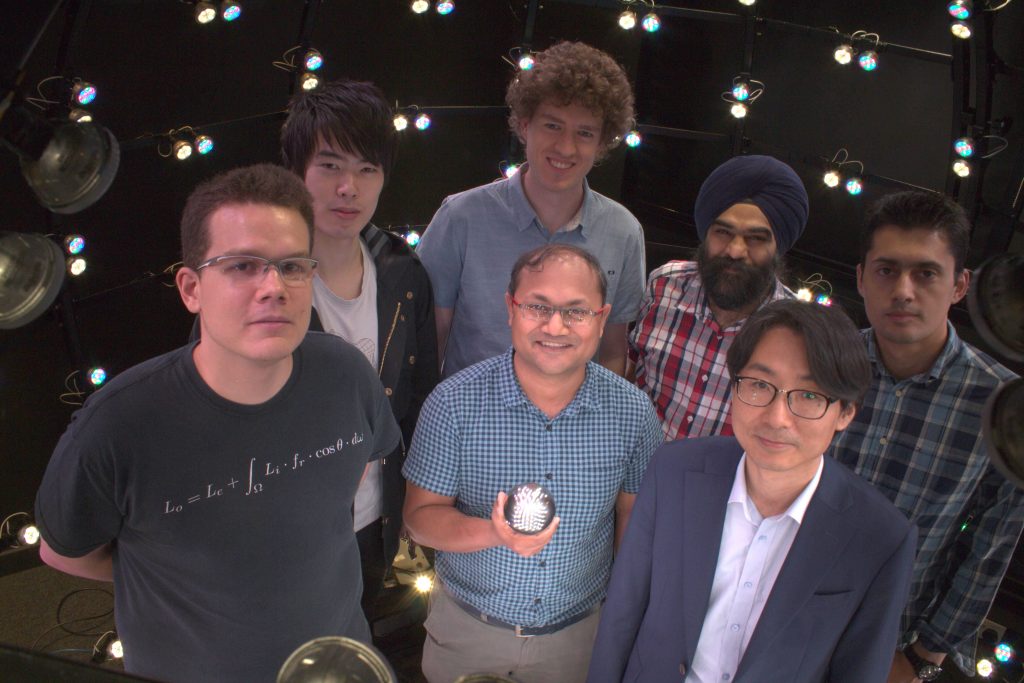

Welcome to the Realistic Graphics and Imaging group in the Department of Computing (DOC) at Imperial College London. We conduct research in realistic computer graphics, spanning the acquisition, modeling and rendering of real-world materials, objects and scenes, as well as imaging for graphics and vision, including computational photography and illumination. We are affiliated with the Visual Computing research theme within DOC.